- Introduction

- Where is the code?

- Demo Video

- Prerequisites

- How do I run all this stuff?

- Known issues

- Some basics

- The app

- Routing

- Some common React / Bootstrap UI components

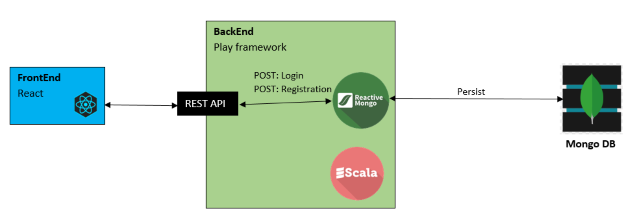

- Registration workflow

- Passenger registration

- Driver registration

- Login React component

- Json Support

- Login route

- Login controller

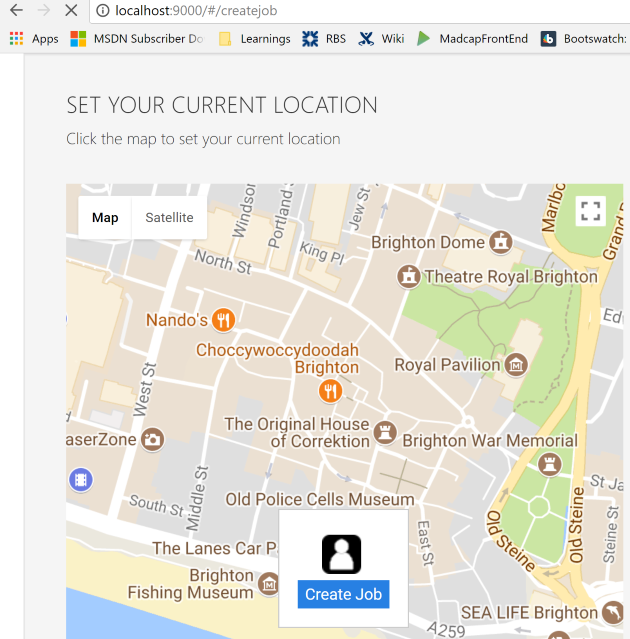

- CreatJob React component

- JSON Payload

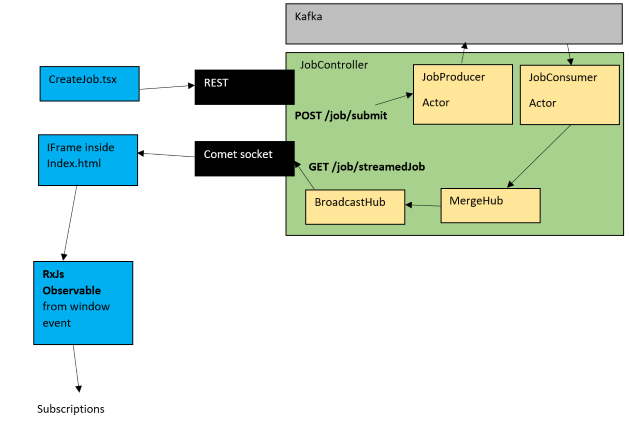

- Push the consumed Job out of the forever frame (Comet functionality in Play backend)

- Have a new RxJs based Observable over the comet based forever frame, and ensure that is working

- Final diagram to help you solidify this section

- ViewJob react component

- So what should the View Job page do?

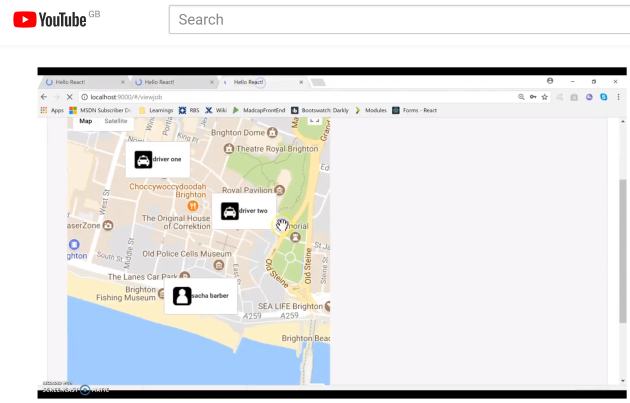

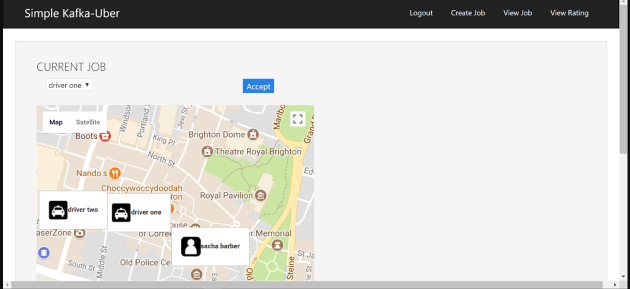

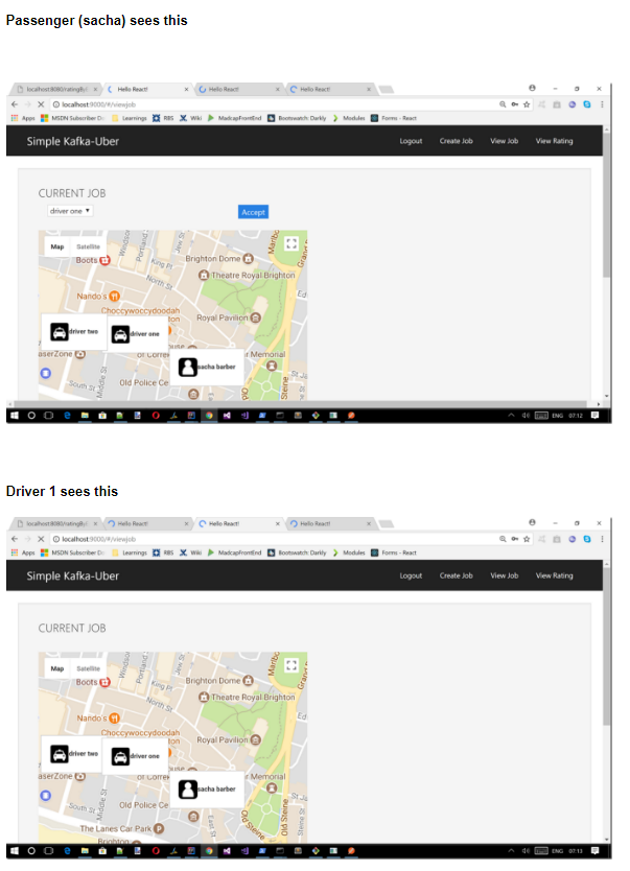

- What does it look like when run?

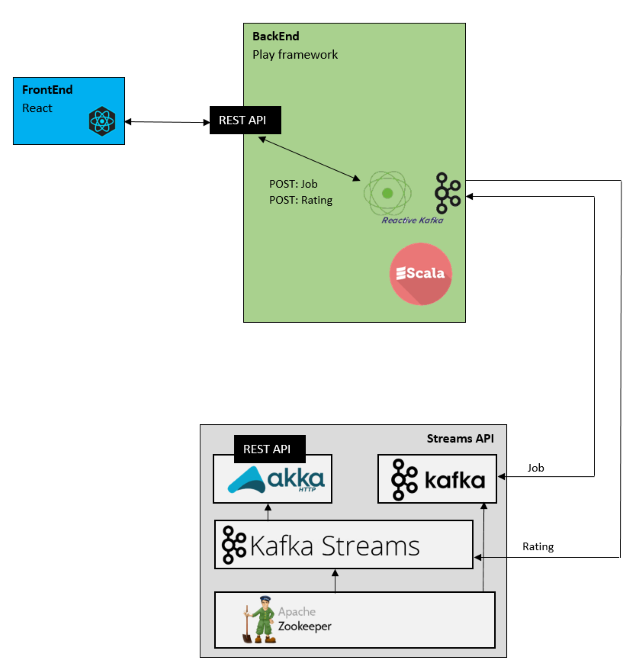

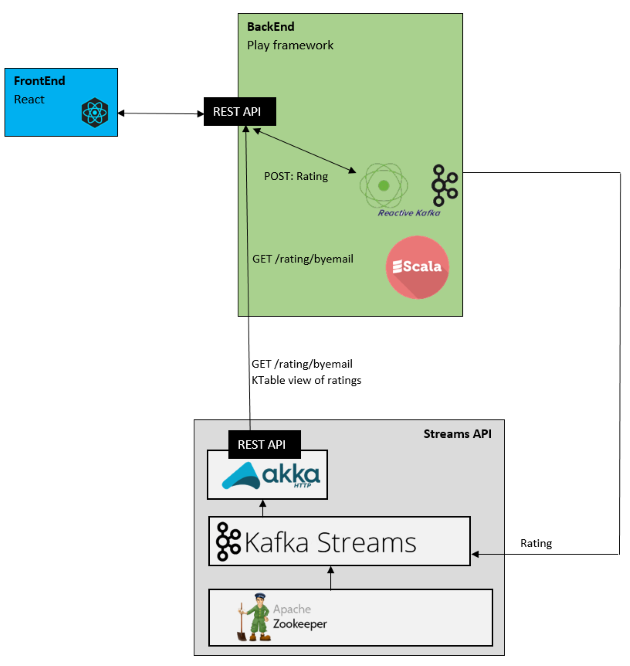

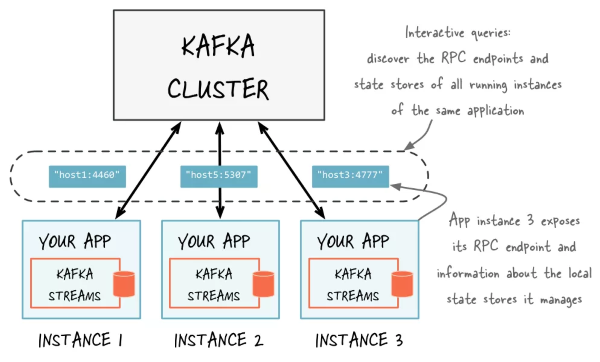

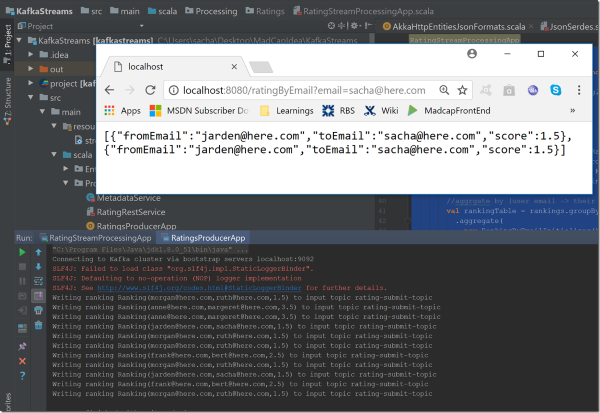

- Walking through Kafka Streams interactive queries

- REST Endpoint Facade

- Controller Action

- React front end for ratings

- Login workflow

- CreateJob workflow

- ViewJob workflow

- ViewRating workflow

- Conclusion

Introduction

This article is the culmination of stuff I have been doing on the train on my way to work over 6 months. I have written quite a lot of blog posts on this already which you can read about on the projects home page on my blog : https://sachabarbs.wordpress.com/2017/05/01/madcap-idea/

There are 13 blog posts there, but I thought it would be good to also have this overall article which covers all of it, as the blog posts are more a sequence of events that I went through which talk about the pieces in great detail as I went through them

For example these were the blog posts

- MADCAP IDEA PART 1 : START OF THE CLIENT SIDE PORTION OF THE WEB SITE

- MADCAP IDEA PART 2 : ADDING DI/IOC TO THE CLIENT SIDE FRONT END WEB SITE

- MADCAP IDEA PART 3 : BRINGING PLAY BACK END INTO THE FOLD + SOME BASIC STREAMING

- MADCAP IDEA PART 4 : PROTOTYPING THE SCREENS

- MADCAP IDEA PART 5 : ADDING REACT-ROUTER

- MADCAP IDEA PART 6 : STATIC SCREEN DESIGN

- MADCAP IDEA PART 7 : REGISTRATION/LOGIN BACKEND

- MADCAP IDEA PART 8 : INTERMEDIATE STEP, REST API FOR INTERACTIVE KAFKA STREAM KTABLE QUERIES

- MADCAP IDEA PART 9 : KAFKA STREAMS INTERACTIVE QUERIES

- MADCAP IDEA PART 10 : PLAY FRAMEWORK REACTIVE KAFKA PRODUCER

- MADCAP IDEA PART 11 : FINISHING THE VIEW RATING PAGE

- MADCAP IDEA PART 12 : GETTING THE CREATE JOB TO WORK ENDEND

- MADCAP IDEA PART 13 : GETTING THE VIEW JOB TO WORK END-END

Special Thanks

I would like to call out a special thanks to a very special man, Peter O'Hanlon code project legend and all round awesome dude. Who I asked to review this behemoth article, Pete immediately said yes, and found time to make that happen, depsite the fact he is a family man runs his own business and has his own cool ideas going on.

Thanks Pete, I salute you

So just exactly what is it that I was/am talking about?

In essence I want to write a very (pardon the pun) but uber simple uber type app. Where there are the following functional requirements

- There should be a web interface that a client can use. Clients may be a driver or a pickup client requiring a delivery

- There should be a web interface that a pickup client can use that shows a pickup client location on a map, which the pickup client chooses.

- The pickup client may request a pickup job, in which case drivers that are in the area bid for a job.

- The pickup client location should be visible to a driver on a map

- A driver may bid for a pickup client job, and the bidding driver(s) location should be visible to the pickup client.

- The acceptance of the bidding driver is down to the pickup client

- Once a pickup client accepts a driver, ONLY the assigned driver(s) current map position will be shown to the pickup client

- When a pickup client is happy that they have been picked up by a driver, the pickup client may rate the driver from 1-10, and the driver may also rate the pickup client from 1-10.

- The rating should only be available once a pickup client has marked a job as completed

- A driver or a pickup client should ALWAYS be able to view their previous ratings.

Whilst this may sound childs play to a lot of you (me included if I stuck to using simply CRUD operations), I just want to point out that this app is meant as a learning experience so I will not be using a simple SignalR Hub, and a couple of database tables.

I have written this project using a completely different set of technologies from the norm. Some of the technology choices could easily scale to hundreds of thousands of requests per second (Kafka has your back here)

TECHNOLOGIES INVOLVED

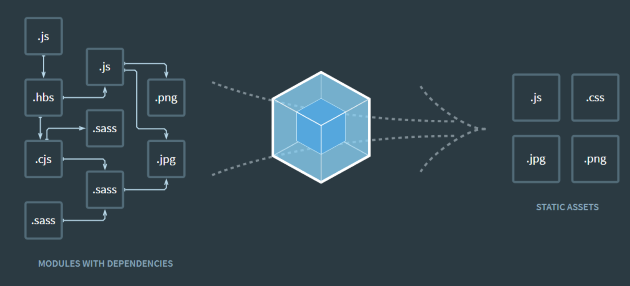

- WebPack

- React

- React Router

- PowerShell

- TypeScript

- Babel.js

- Akka

- Scala

- Play (Scala Http Stack)

- MongoDB

- SBT

- Kafka

- Kafka Streams

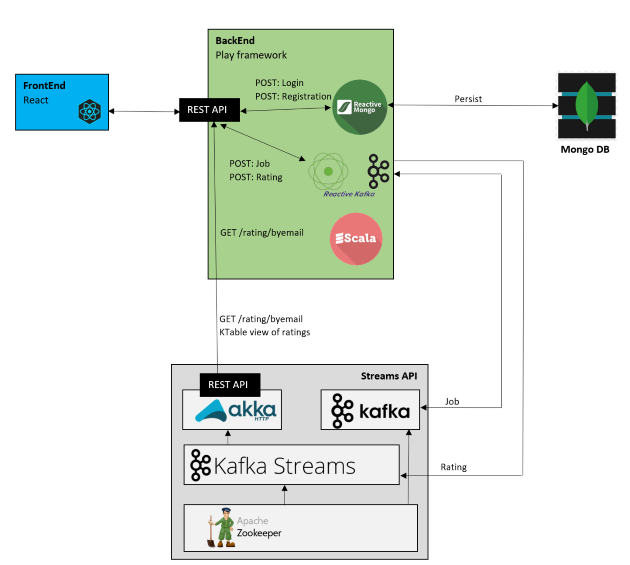

They say a picture says a 1000 words, so here is a nice picture to get things started

Now before we carry on, let me just acknowledge that www.codeproject.com is mainly biased towards Microsoft tech and this code is mainly Scala/TypeScript. However I think there is still plenty to learn along the way, so don't let the fact it's not .NET/C/C++ put you off

Where is the code?

The code for this lot is here : https://github.com/sachabarber/MadCapIdea

Demo Video

CLICK THE IMAGE TO LAUNCH THE VIDEO

Prerequisites

As shown in the introduction section, there are many moving peices to this demo so, naturally, there are quite a few dependencies. I did try to get it to work in Docker for you, however I found that I still needed to create external scripts to orchestrate it all anyway. In the end I just went with downloading stuff as I will specify, and then giving a single PowerShell script to run most stuff, apart from 2 Scala projects.

This is the list of stuff you will need in order to run this code:

This has all been developed on Windows, so these instructions are all about how to get stuff working on Windows

- MongoDB : https://www.mongodb.com/dr/fastdl.mongodb.org/win32/mongodb-win32-x86_64-2008plus-ssl-3.4.6-signed.msi/download

- Confluence Platform 3.3.0 Open Source : http://packages.confluent.io/archive/3.3/confluent-oss-3.3.0-2.11.zip

- SBT

- Java 8 SDK

- Webpack

- Node.Js

- NPM

- IntelliJ IDEA v17.0 community

- PowerShell

Once you have downloaded all of this you will need to do a few things in order to run it nicely on Windows.

- Download the dependencies above (Keep a note of where you downloaded them as you will need them here and later)

- Replace the official YOUR extract location\confluent-3.3.0\bin\windows BAT files with the ones found here :https://github.com/renukaradhya/confluentplatform/tree/master/bin/windows

- Modify the YOUR extract location\confluent-3.3.0\etc\kafka\zookeeper.properties file to change the dataDirto something like dataDir=c:/temp/zookeeper

- Modify the YOUR extract location\confluent-3.3.0\etc\kafka\server.properties file to uncomment the line delete.topic.enable=true

- Modify the YOUR extract location\confluent-3.3.0\etc\kafka\server.properties file file to change the log.dirsto log.dirs=c:/temp/kafka-logs

You will need to remember some of these paths for the next section too, so just be mindful that you may have to edit the PowerShell script later with some new paths

How do I run all this stuff?

There are quite a few moving peices to this app, and they all need to be running in order for it to all work together.

1. Update node.js dependencies

Make sure you have Node.Js installed, and make sure NPM is installed too, also ensure that webpack is globally installed

Open command line and change to the MadCapIdea\PlayBackEndApi\FrontEndWebSite\ folder and run npm install

- now run

webpackfrom same folder

2. Kafka/Zookeeper etc etc

You can run the following powershell script to get all the pre-requistites up and running (assuming you have downloaded them all)

- Open PowerShell command line and change to the

PowerShellProject\PowerShellProject\folder and run.\RunPipeline.ps1. Make sure you modify the top section paths to match your own installation paths

3. Play application

- Open the SBT/Scala project inside IntelliJ IDEA (you will need the SBT plugin, and Java8 installed on your machine).

- Open this folder

MadCapIdea\PlayBackEndApiand run it.You may need to create a run time configuration

4. Kafka Streams application

- Open the SBT/Scala project inside IntelliJ IDEA (you will need the SBT plugin, and Java8 installed on your machine).

- Open this folder

MadCapIdea\KafkaStreamsand run it.You may need to create a run time configuration where you point to this main classRatingStreamProcessingApp

5. React

- Open a browser navigate to

http://localhost:9000, and register some users both passenger/driver

I normally follow this set of steps afterwards

- open a tab, login as a passenger that I had created

- go to the "create job" page, click the map, push the "create job" button

- open a NEW tab, login as a new driver, go to the "view job" page

- on the 1st tab (passenger) click the map to push passenger position to driver

- on the 2nd tab (driver) click the map to push driver position to passenger

- repeat last 4 steps for additonal driver

- on client tab pick driver to accept, click accept button

- complete the job from client tab, give driver rating

- complete the job from paired driver tab, give passenger rating

- go to "view rating" page, should see ratings

Known issues

The following are known issues

- Once a driver and passenger become paired, the position updates from either are no longer reflected. I am sure this would boil down to a single JavaScript method that needs updating in the

ViewJob.tsxfile. - On the ViewJob page I originally wanted the passenger to be able to accept a driver by clicking a button in the drivers Overlay GoogleMap component. However no matter what I tried this causes a MapClick event to still happen, which changed the passengers position. So I had to resort to using a weird drop down select way for a passenger to accept a driver. This sucked, but meh

So those are the issues, I just kind of got to the end of a very long road (I have been writing about this on an off for 6 months of train rides, as well as not caring at all, and just staring into space) and was just happy that I got 99% of the stuff done that I set out to do. I just thought you know what, the app as it is now, demonstrates everything I set out to do, so I'm ok with 1-2 known issues.

Some basics

Before we dive into the actual code for the app (and there is quite a bit of it), I just thought it may be good to go over some of the individual building blocks that make up the app as a whole first, the next few sections will do that. It is a long list of stuff, and the demo app makes use of all of this plus a few more bits, that I deemed to be not important enough to warrant their own sections. We will learn more about each of these when we walk through the actual demo app code, this is more of an overiew of the various parts before we look at the specific usage

What is Kafka?

Overview

Apache Kafka is an open-source stream processing platform developed by the Apache Software Foundation written in Scala and Java. The project aims to provide a unified, high-throughput, low-latency platform for handling real-time data feeds. Its storage layer is essentially a "massively scalable pub/sub message queue architected as a distributed transaction log," making it highly valuable for enterprise infrastructures to process streaming data. Additionally, Kafka connects to external systems (for data import/export) via Kafka Connect and provides Kafka Streams, a Java stream processing library.

The design is heavily influenced by transaction logs.

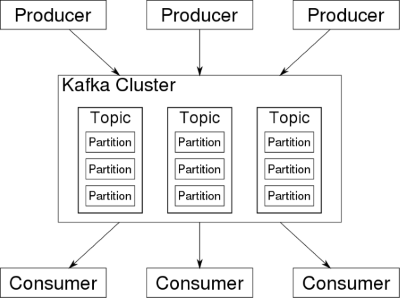

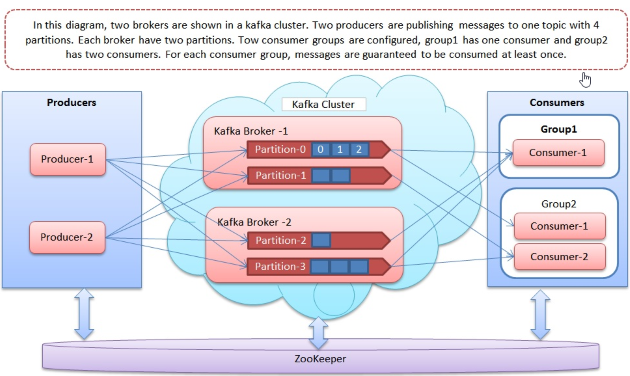

Apache Kafka Architecture

Kafka stores messages which come from arbitrarily many processes called "producers". The data can thereby be partitioned in different "partitions" within different "topics". Within a partition the messages are indexed and stored together with a timestamp. Other processes called "consumers" can query messages from partitions. Kafka runs on a cluster of one or more servers and the partitions can be distributed across cluster nodes.

Apache Kafka efficiently processes the real-time and streaming data when used along with Apache Storm, Apache HBase and Apache Spark. Deployed as a cluster on multiple servers, Kafka handles its entire publish and subscribe messaging system with the help of four APIs, namely, producer API, consumer API, streams API and connector API. Its ability to deliver massive streams of message in a fault-tolerant fashion has made it replace some of the conventional messaging systems like JMS, AMQP, etc.

The major terms of Kafka's architecture are topics, records, and brokers. Topics consist of stream of records holding different information. On the other hand, Brokers are responsible for replicating the messages.

- Producer API - Permits the applications to publish streams of records. (covered in this article)

- Consumer API - Permits the application to subscribe to the topics and processes the stream of records. (covered in this article)

- Streams API This API converts the input streams to output and produces the result. (covered in this article)

- Connector API Executes the reusable producer and consumer APIs that can link the topics to the existing applications. (not covered in this article)

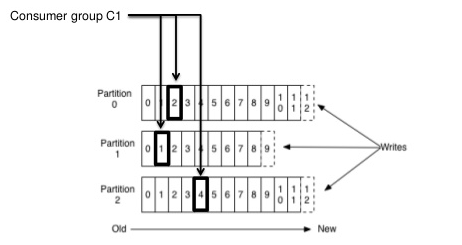

Anatomy of a Kafka Topic

Offset : messages in the partitions are each assigned a unique (per partition) and sequential Id, called the "offset". The "offset" is tracked by consumers, where each consumer tracks via (offset, partition, topic) tuples

Consumer Groups

Consumers label themselves with a consumer group name, and each record published to a topic is delivered to one consumer instance within each subscribing consumer group. Consumer instances can be in separate processes or on separate machines.

If all the consumer instances have the same consumer group, then the records will effectively be load balanced over the consumer instances.

If all the consumer instances have different consumer groups, then each record will be broadcast to all the consumer processes

A two server Kafka cluster hosting four partitions (P0-P3) with two consumer groups. Consumer group A has two consumer instances and group B has four.

Kafka Performance

Due to its widespread integration into enterprise-level infrastructures, monitoring Kafka performance at scale has become an increasingly important issue. Monitoring end-to-end performance requires tracking metrics from brokers, consumer, and producers, in addition to monitoring ZooKeeper which is used by Kafka for coordination among consumers

https://en.wikipedia.org/wiki/Apache_Kafka up on date 02/01/18

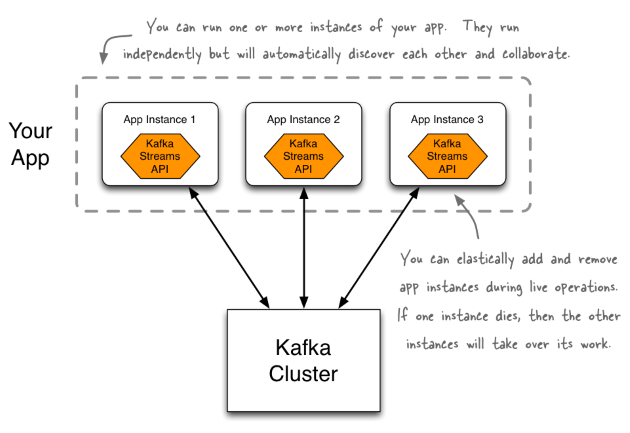

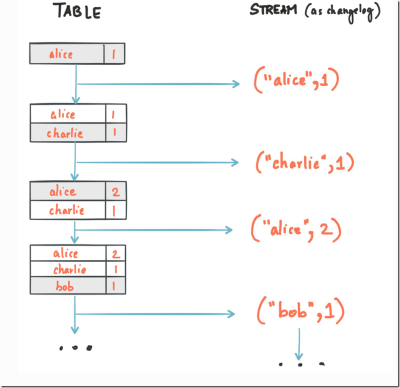

What is Kafka Streams?

The Streams API of Apache Kafka, available through a Java library, can be used to build highly scalable, elastic, fault-tolerant, distributed applications and microservices. First and foremost, the Kafka Streams API allows you to create real-time applications that power your core business. It is the easiest yet the most powerful technology to process data stored in Kafka. It builds upon important concepts for stream processing such as efficient management of application state, fast and efficient aggregations and joins, properly distinguishing between event-time and processing-time, and seamless handling of late-arriving and out-of-order data.

A unique feature of the Kafka Streams API is that the applications you build with it are normal Java applications. These applications can be packaged, deployed, and monitored like any other Java application there is no need to install separate processing clusters or similar special-purpose and expensive infrastructure!

An application that uses the Kafka Streams API is a normal Java application. Package, deploy, and monitor it like you would do for any other Java application. Even so, your application will be highly scalable, elastic, and fault-tolerant.

https://docs.confluent.io/current/streams/introduction.html up on date 02/01/18

So that is what the official docs say about it. Here is my take on it

Kafka Streams is an additional API on top of Kafka that allows you to perform many aggregate and filtering, time based windowing operations over the incoming messages that can either be stored to an internal database key-value representation known as a KTable which uses a state store (based on RocksDB), or you may choose to push the transformed stream values out to a new output topic.

You can perform complex stream processing, merge streams, and also store accumulated stream state.

It is a an AWESOME bit of kit

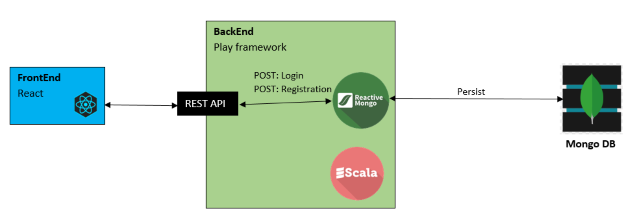

What is Play?

The Play Framework is a Scala based MVC (model view controller) type web application framework. As such it has in built mechanisms for things typical of a MVC web framework (certainly if you have done any ASP MVC .NET you would find it very familiar).

So we have the typical MVC concerns covered by the Play Framework

- Controllers

- Actions

- Routing

- Model binding

- JSON support

- View engine

Thing is, I will not be doing any actual HTML in the Play Framework back end code, I want to do all of that using the previously covered webpack/typescript/react starter code I have shown so far. Rather I will be using the Play Framework as a API backend, where we will simply be using various controllers as endpoint to accept/serve JSON, and Event streamed data. All the actual front end work/routing will be done via webpack and React.

There are still some very appealing parts in Play that I did want to make use of, such as:

- It is Scala, which means when I come to integrate Kafka / Kafka Streams it will be ready to do so

- It uses Akka which I wanted to use. I also want to use Akka streams, which Play also supports

- Play controllers lend themselves quite nicely to being able to create a fairly simple REST API

- It can be used fairly easily to serve static files (think of these as the final artifacts that come out of the webpack generation pipeline). So things like minimized CSS / JS etc etc

So hopefully you can see that using Play Framework still made a lot of sense, even if we will only end up using 1/2 of what it has to offer. To be honest the idea of using controllers for a REST API is something that is done in ASP MVC .NET all time either by using of actual controllers or by using the WebApi.

Ok so now that we know what we will be using Play Framework for, how about we dive into the code for this post.

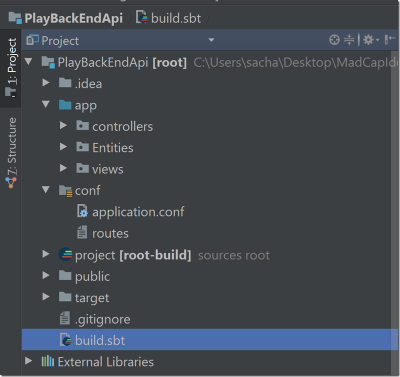

Play Framework Basics

Lets start by looking at the bare bones structure of a Play Framework application, which looks like this (I am using IntelliJ IDEA as my IDE)

Lets talk a bit about each of these folders

app

This folder would hold controllers/views (I have added the Entities folder there that is not part of a Play Framework application requirements). Inside the controllers folder you would find controllers, and inside the views folder you would find views . For the final app there will be no views folder, I simply kept that in this screenshot to talk about what a standard Play Framework application looks like

conf

This folder contains the configuration for the Play Framework application. This would include the special routes file, and any other application specific configuration would might have.

Lets just spend a minute having a look at the Play Framework routes file, which you can read more about here : https://www.playframework.com/documentation/2.5.x/ScalaRouting

The routes file has its own DSL, that is responsible for matching a give route with a controller + controller action. The controller action that matches the route is ultimately responsible for servicing the http request. I think the DSL shown in routes file below is pretty self explanatory with perhaps the exception of the assets based routes.

All assets based http requests (ie ones that start with /assets for example http://localhost:9000/assets/images/favicon.png would actually be routed through to a special controller called Assets. You dont see any code for this one, its part of the Play Framework application codebase. This special Assets inbuilt play controller is responsible for serving up static data files which it expects to find in the public folder. So for example our initial request of http://localhost:9000/assets/images/favicon.png would get translated into this file (relative path from project root) /public/images/favicon.png. As I say this is handled for you by the special Assets built in controller.

The only other funky part to the Assets based route is that it uses a *file in its route. Which essentially boils down to the play framework being able match a multi-part path. Which we actually just saw with the example above http://localhost:9000/assets/images/favicon.png , see how that contains not only the file name, but also a directory of images . The Assets controller + routing is able to deal with that path just fine.

# Routes # This file defines all application routes (Higher priority routes first) # ~~~~ # Home page GET / controllers.HomeController.index() GET /scala/comet/liveClock controllers.ScalaCometController.streamClock() GET /scala/comet/kick controllers.ScalaCometController.kickRandomTime() # Map static resources from the /public folder to the /assets URL path GET /assets/*file controllers.Assets.at(path="/public", file)

Ok so moving on to the rest of the standard folders that come with a Play Framework application

public

This is where you will need to put any static content that you wish to be served. Obviously views (if you use that part of play) will be within in the app/views folder. Like I say I am not using the views aspect of Play so you will not be seeing any views in my views folder. I instead want to let webpack et all generate my routing, web page etc etc. I do however want to serve bundles so later on I will be showing you how my webpack generated bundles fit in with the Play Framework ecco system.

target

Since this is a scala based project we get the standard scala based folders, and target is one of them, that has the generated/compiled code in it.

SBT

It is worth pointing out that my Play Framework application is an SBT based project, as such there is an SBT aspect to it, which largely boils down to these files

Project [root-build] / plugs.sbt file

This file adds Play as a plugin for the SBT project

// The Lightbend repository resolvers += Resolver.typesafeRepo("releases") // Use the Play sbt plugin for Play projects addSbtPlugin("com.typesafe.play" % "sbt-plugin" % "2.5.14")

build.sbt

This is the main SBT build file for the project. This is where all our external dependencies are brought in etc etc (standard SBT stuff)

import play.sbt._ import sbt.Keys._ import sbt._ name := "play-streaming-scala" version := "1.0-SNAPSHOT" scalaVersion := "2.11.11" lazy val root = (project in file(".")).enablePlugins(play.sbt.PlayScala) javacOptions ++= Seq("-source", "1.8", "-target", "1.8", "-Xlint") initialize := { val _ = initialize.value if (sys.props("java.specification.version") != "1.8") sys.error("Java 8 is required for this project.") }

So I think that covers the basics of a standard Play Framework application, the remainder of this article will cover the actual code for the demo project, where we will dive into routes/controllers etc etc

What is Akka

Akka is a great framework for building distibuted fault tolerant apps. It is built around the concept of actors, and has failure in mnid from the outset. It also comes with suport for HTTP over actors, and comes with play suport out of the box. One thing that also comes with Akka that I make use of is its reactive streams API. This is something like Rx whre you build streams of data that are also fault tolerant, and also feature back pressure to ensure a consumer is not over burdened by a fast producer. I have written a lot about Akka before which you can read more about here : https://sachabarbs.wordpress.com/akka-series/ where I cover quite a lot of Akkas functionality

What is WebPack?

We are headed towards an era where browsers will natively support modules, and dependencies but we are not there yet. Over the years there have been many attempts at JavaScript module management, and dependency tracking of related files, such as

- ASP MVC bundles (fairly simplistic only bundles no other features)

- CommonJs (require syntax, which is fairly intrusive in how your JS files work)

- Browserify (again fairly intrusive in how your JS files are loaded and what they need to manage dependencies)

- NPM (not really a module manager as such more like NuGet for installing packages)

- SystemJS (has import/export syntax but a bit more syntax than Webpack)

- JSPM uses SystemJS (fairly nice but not in as much use as Webpack)

- Various task runners can be used such as Grunt/Gulp to build bundles

All of these have there pros/cons, however I think that it is fair to say that WebPack has emerged as the defacto standard module manager for JavaScript (for now anyway), that supports rich dependency graphs and bundles and also supports things like

- Transpilers (such as Babel/Typescript/SASS/SCSS/LESS etc etc)

- Sourcemaps (to go from your transpiled JS back to what you wrote)

- Minification

- File hashing (to allow browsers to load latest bundles as it has a different hash)

This is kind of what you get with webpack, where it is very clever about preserving the dependency graph and the bundles created, and allows you to use things like export and import to manage your inter dependencies

What is Babel?

Babel is a very neat JavaScript library that allows you to use next generation JavaScript syntax now, even if your targetted browser doesn't suport the syntax you are trying to use. Is is installed via the Node Packager Manager (NPM) and is one part of you transpilation pipeline. For this article we push stuff through TypeScript transpiler -> Babel transpiler and the final result is JavaScript that can be sent ot the browser.

Using babel this is the sort of things you will be able to do, where native browser support for these may be varied, or not supported at all

What is SASS/SCSS/LESS

I guess if you have used HTML you will have certainluy used CSS. Now CSS is great, but it lacks certain things such as

- Variables

- Heirachy selectors

- Mixins

This is something that SASS/SCSS fixes. In much the same way that things like Typescript are transpiled to JavaScript, SASS/SCSS is transpiled to CSS.

By using SCSS you are now able to do things like this, note the variables

$blue: #3bbfce; $margin: 16px; .content_navigation { border-color: $blue; color: darken($blue, 10%); } .border { padding: $margin / 2; margin: $margin / 2; border-color: $blue; }What is React?

Unless you have been living under a rock you will have heard/used/seen React. React is maintained and developed by Facebook, and it is a JavaScript library for building user interfaces. It is not as much as a framework as say something like Angular is, and it is really just the "View" part of the typical MVC puzzle that is so prevelant in modern JS UI libraries/frameworks. It is declarative, and component centric. It is accepted that a React app would be made up of many smaller components. I can't give you a full walkthrough of React here, but we will certainly see more React code/components as the article demo code is dissected below

What is TypeScript?

Typescript is a Microsoft offering that attempts to bring better typings and other useful constructs/language features to JavaScript. As TypeScript is a superset of JavaScript, existing JavaScript programs are also valid TypeScript programs. Typescript files will get transpiled into regular javascript files via a Typescript compiler (or in the case of WebPack via a Webpack loader)

Some of the features that TypeScript brings to the table are

- Types

- Interfaces

- Parameter types

- Enums

- Generics

The demo article that goes with this makes a lot of use of TypeScript (particularly TSX which is a TypeScript verison of a React JSX file), and will also make use of a nice Inversion Of Control container for TypeScript called Inversify.

The app

The next set of sections will walk through the demo app. I have decided to take a workflow approach to describing the app, and as such will walk/talk through each of the workflows, which I think is the best way to do it

The app has MANY moving parts, but can largely be broken down into the following areas

- Play backend API (scala)

- React front end (TypeScript)

- Kafka Streams (scala)

We will get to all of these parts, but before that let's examine some of the stuff that helps the front end work get of the ground

NPM requirements

The demo project uses quite a few components, such as

- TypeScript

- React

- Babel

- RX

- SCSS

- Various other libraries

As such we need a way to pull all these packages into use, so for that and the front end side of things we use NPM which you will need to have installed. The following shows the NPM dependencies for the demo app PlayBackEndApi/FrontEndWebSite/package.json file

Copy Code

Copy Code{ "name": "task1webpackconfig", "version": "1.0.0", "description": "webpack 2 + TypeScript 2 + Babel example", "repository": { "type": "git", "url": "git+https://github.com/sachabarber/MadCapIdea.git" }, "keywords": [ "babel", "typescript", "webpack", "bundling", "javascript", "npm" ], "author": "sacha barber", "homepage": "https://github.com/sachabarber/MadCapIdea#readme", "dependencies": { "bootstrap": "^3.3.7", "inversify": "^4.1.0", "jquery": "^3.2.1", "lodash": "^4.17.4", "react": "^15.5.4", "react-bootstrap": "^0.28.1", "react-bootstrap-validation": "^0.1.11", "react-dom": "^15.5.4", "react-google-maps": "^7.0.0", "react-measure": "^2.0.2", "react-router": "^3.0.5", "react-stars": "^2.1.0", "reflect-metadata": "^0.1.10", "revalidator": "^0.3.1", "rx": "^4.1.0", "webpack": "^2.5.1", "webpack-merge": "^4.1.0" }, "devDependencies": { "@types/jquery": "^2.0.43", "@types/lodash": "^4.14.63", "@types/react": "^15.0.24", "@types/react-dom": "^15.5.0", "@types/rx": "^4.1.1", "awesome-typescript-loader": "^3.1.3", "babel-core": "^6.24.1", "babel-loader": "^7.0.0", "babel-preset-es2015": "^6.24.1", "babel-preset-es2015-native-modules": "^6.9.4", "babel-preset-react": "^6.24.1", "css-loader": "^0.28.1", "extract-text-webpack-plugin": "^2.1.0", "file-loader": "^0.11.1", "html-webpack-plugin": "^2.28.0", "node-sass": "^4.5.2", "on-build-webpack": "^0.1.0", "sass-loader": "^6.0.3", "source-map-loader": "^0.2.1", "typescript": "^2.3.2", "url-loader": "^0.5.8", "webpack": "^2.4.1" }, "scripts": { "build-dev": "webpack -d --config webpack.develop.js", "build-prod": "webpack --config webpack.production.js" } }Babel config

As stated above the demo project makes use of Babeljs.io to use future state JavaScript now. As such we also need to supply a small Babel config file (PlayBackEndApi/FrontEndWebSite/.babelrc), this is shown below, where we opt to use the ES2015/React presets

{ "presets": ["es2015","react"] }TypeScript config

The demo also makes use of TypeScript which also need some specific configuration. As such we also need to supply a small TypeScript config file (PlayBackEndApi/FrontEndWebSite/tsconfig.json), this is shown below, the full set of TypeScript options can also be found here :https://www.typescriptlang.org/docs/handbook/tsconfig-json.html

{ "compilerOptions": { "allowSyntheticDefaultImports": true, "moduleResolution": "node", "outDir": "./dist/", "sourceMap": true, "noImplicitAny": false, "module": "es2015", "target": "es5", "lib": ["es6", "dom"], "jsx": "react", "experimentalDecorators": true, "emitDecoratorMetadata": true, "types" : ["jquery", "lodash", "react", "react-dom", "reflect-metadata"] }, "include": [ "./src/**/*" ] }Sourcemap files

Sourcemaps are a magical thing that allow you to write your JavaScript in TypeScript say which is then possibly run through other transpilers (such as Babel) and then webpack where it is bundled according to your rules, where you then send the JavaScript to the browser. That sounds great, but do you write code write code first time, I don't, and constantly need to debug stuff.

So if I wrote stuff in TypeScript and that gets turned into plain vanilla JavaScript, how on earth do I make sense of the stuff that is in the browser?

Well that is where sourcemaps come in, the literally send a clever map that allows you to put a break point into your source code (which will be sent to the browser, ideally only in development phases) and it will know how to translate that breakpoint into the correct place/line in the transpiled vanilla JavaScript that the browser is using.

Webpack has in built support for sourcemaps (phew)

The best write up of source maps I have seen is this one :https://www.html5rocks.com/en/tutorials/developertools/sourcemaps/

It should be noted that SourceMaps should only be used in development phases, as in production you should/would want to minify your JavaScript too.

How does Webpack/Play work with this?

WebPack has this concept of loaders that are what gets used to load different file types. So for example you can use a TypeScript loader that gets piped through a babel loader, where the final result will be vanilla JavaScript.

- You can also control how you final bundle will work, what name it has, where is will be generated etc etc

- How minification will work

- Configure loaders

- Turn on SourceMap

- Configure things like jquery and lodash to appear in the usual places as

$and_respectively

Perhaps its best to see the demo codes WebPack file which looks like this

PlayBackEndApi/FrontEndWebSite/webpack.config.js

Copy Code

Copy Codelet _ = require('lodash'); let webpack = require('webpack'); let path = require('path'); let fs = require("fs"); let WebpackOnBuildPlugin = require('on-build-webpack'); let ExtractTextPlugin = require('extract-text-webpack-plugin'); let HtmlWebpackPlugin = require('html-webpack-plugin'); let babelOptions = { "presets": ["es2015", "react"] }; function isVendor(module) { return module.context && module.context.indexOf('node_modules') !== -1; } let entries = { index: './src/index.tsx', indexCss: './scss/index.scss' }; //build it to the Play Framework public folder, which is services by the assets controller let buildDir = path.resolve(__dirname, '../public/dist'); module.exports = { context: __dirname, entry: entries, output: { filename: '[name].bundle.[hash].js', path: buildDir, //this is to make it play nice with the Play Framework Assets controllers //that deals with static data publicPath: '/assets/dist' }, // these break for node 5.3+ when building WS stuff node: { fs: 'empty' }, watch: true, devServer: { open: true, // to open the local server in browser contentBase: __dirname, }, // Enable sourcemaps for debugging webpack's output. devtool: "source-map", resolve: { extensions: [".tsx", ".ts", ".js", ".jsx"], modules: [path.resolve(__dirname, "src"), "node_modules"] }, plugins: [ //The ProvidePlugin makes a module available as a variable in every other //module required by webpack new webpack.ProvidePlugin({ $: "jquery", jQuery: "jquery", "window.jQuery": "jquery" }), // creates a common vendor js file for libraries in node_modules new webpack.optimize.CommonsChunkPlugin({ names: ['vendor'], minChunks: function (module, count) { return isVendor(module); } }), // creates a common vendor js file for libraries in node_modules new webpack.optimize.CommonsChunkPlugin({ name: "commons", chunks: _.keys(entries), minChunks: function (module, count) { return !isVendor(module) && count > 1; } }), //will unlink unused files on a build //http://stackoverflow.com/questions/40370749/how-to-remove-old-files-from-the-build-dir-when-webpack-watch new WebpackOnBuildPlugin(function (stats) { const newlyCreatedAssets = stats.compilation.assets; const unlinked = []; fs.readdir(path.resolve(buildDir), (err, files) => { files.forEach(file => { if (file != "fonts") { if (!newlyCreatedAssets[file]) { fs.unlink(path.resolve(buildDir + '\\' + file)); unlinked.push(file); } } }); if (unlinked.length > 0) { console.log('Removed old assets: ', unlinked); } }) }), //scss/sass files extracted to common css bundle new ExtractTextPlugin({ filename: '[name].bundle.[hash].css', allChunks: true, }), new HtmlWebpackPlugin({ filename: 'index.html', template: 'template.html', }) ], module: { rules: [ // All files with a '.ts' or '.tsx' extension will be handled by 'awesome-typescript-loader' 1st // then 'babel-loader' // NOTE : loaders run right to left (think of them as a cmd line pipe) { test: /\.ts(x?)$/, exclude: /node_modules/, use: [ { loader: 'babel-loader', options: babelOptions }, { loader: 'awesome-typescript-loader' } ] }, // All files with a .css extenson will be handled by 'css-loader' { test: /\.css$/, loader: ExtractTextPlugin.extract(['css-loader?importLoaders=1']), }, // All files with a .scss|.sass extenson will be handled by 'sass-loader' { test: /\.(sass|scss)$/, loader: ExtractTextPlugin.extract(['css-loader', 'sass-loader']) }, // All files with a '.js' extension will be handled by 'babel-loader'. { test: /\.js$/, exclude: /node_modules/, use: [ { loader: 'babel-loader', options: babelOptions } ] }, { test: /\.png$/, loader: "url-loader?limit=100000" }, { test: /\.jpg$/, loader: "file-loader" }, { test: /\.woff(\?.*)?$/, loader: 'url-loader?prefix=fonts/&name=fonts/[name].[ext]&limit=10000&mimetype=application/font-woff' }, { test: /\.woff2(\?.*)?$/, loader: 'url-loader?prefix=fonts/&name=fonts/[name].[ext]&limit=10000&mimetype=application/font-woff2' }, { test: /\.ttf(\?.*)?$/, loader: 'url-loader?prefix=fonts/&name=fonts/[name].[ext]&limit=10000&mimetype=application/octet-stream' }, { test: /\.eot(\?.*)?$/, loader: 'file-loader?prefix=fonts/&name=fonts/[name].[ext]' }, { test: /\.svg(\?.*)?$/, loader: 'url-loader?prefix=fonts/&name=fonts/[name].[ext]&limit=10000&mimetype=image/svg+xml' }, // All output '.js' files will have any sourcemaps re-processed by 'source-map-loader'. { enforce: "pre", test: /\.js$/, loader: "source-map-loader" } ] } };Of particular note is this part

output: { filename: '[name].bundle.[hash].js', path: buildDir, //this is to make it play nice with the Play Framework Assets controllers //that deals with static data publicPath: '/assets/dist' }What that is doing is using a hash for the file name, such that the browser would immediately see a change in the file, so would reload it, and not cache old JavaScript. The other part of this is where the file gets generated. As this "front end" part still needs to be hosted in back end (Play framework for this article) we need to ensure that the final JavaScript ends up in a location that the Play framework is able to render. For this article this means the static Play framework route which is configured as follows in the PlayBackEndApi/routes file in the PlayBackEndApi project

GET /assets/*file controllers.Assets.at(path="/public", file)

PlayBackEndApi/FrontEndWebSite/webpack.develop.js

Where we might have a specialized develop phase variant (see how we use the base file webpack.config.js as a base) file that looks like this

let commonConfig = require('./webpack.config.js'); let webpack = require('webpack'); let Merge = require('webpack-merge'); module.exports = function (env) { return Merge(commonConfig, {}) }PlayBackEndApi/FrontEndWebSite/webpack.production.js

Where we might have a specialized production phase variant (see how we use the base file webpack.config.jsas a base) file that looks like this, where we do things like this to make the emitted JavaScript production like

- Minify it

- Strip comments

- Strip console.log (some browsers don't have it)

- Don't enable SourceMaps

Copy Code

Copy Codelet commonConfig = require('./webpack.config.js'); let webpack = require('webpack'); let Merge = require('webpack-merge'); module.exports = function (env) { return Merge(commonConfig, { plugins: [ new webpack.LoaderOptionsPlugin({ minimize: true, debug: false }), new webpack.optimize.UglifyJsPlugin({ // Eliminate comments comments: false, beautify: false, mangle: { screw_ie8: true, keep_fnames: true }, compress: { screw_ie8: true, // remove warnings warnings: false, // Drop console statements drop_console: true }, comments: false, sourceMap: false }) ] }) }The SPA

So the React front end is really a Single Page Application, as such you can expect to find a single page. So just where is that single page?

We we need to go back to some of the stuff in the webpack.config.js file, specifically these bits

//build it to the Play Framework public folder, which is services by the assets controller let buildDir = path.resolve(__dirname, '../public/dist'); output: { filename: '[name].bundle.[hash].js', path: buildDir, //this is to make it play nice with the Play Framework Assets controllers //that deals with static data publicPath: '/assets/dist' }, .... .... .... .... new HtmlWebpackPlugin({ filename: 'index.html', template: 'template.html', })What this is doing is saying that Webpack will try and generate a file called index.html by using a template called template.html and the final file will be put in a relative path called /assets/dist, which if you go back a bit was mapped using the following in the PlayBackEndApi/routes file in the PlayBackEndApiproject

GET /assets/*file controllers.Assets.at(path="/public", file)

PlayBackEndApi/FrontEndWebSite/template.html

So what does the template.html file look like, well here it is, where it also includes a CDN references to Google maps API as we use that in this article

<!DOCTYPE html> <html> <head> <meta charset="UTF-8" /> <title>Hello React!</title> <script src="https://maps.googleapis.com/maps/api/js?key=AIzaSyBVtreRNA537_WsNSn2_kOiz3Xhm8w6pEo" type="text/javascript"></script> </head> <body> <div> <iframe id="comet" src="/job/streamedJob"></iframe> </div> <div id="root"></div> <!-- Main --> </body> </html> PlayBackEndApi/public/dist/index.html

So after WebPack has worked its magic, what does the final file look like. Well it looks like this

<!DOCTYPE html> <html> <head> <meta charset="UTF-8" /> <title>Hello React!</title> <script src="https://maps.googleapis.com/maps/api/js?key=AIzaSyBVtreRNA537_WsNSn2_kOiz3Xhm8w6pEo" type="text/javascript"></script> <link href="/assets/dist/vendor.bundle.5c5feaa8663412cf31c5.css" rel="stylesheet"> <link href="/assets/dist/indexCss.bundle.5c5feaa8663412cf31c5.css" rel="stylesheet"></head> <body> <div> <iframe id="comet" src="/job/streamedJob"></iframe> </div> <div id="root"></div> <!-- Main --> <script type="text/javascript" src="/assets/dist/vendor.bundle.5c5feaa8663412cf31c5.js"></script> <script type="text/javascript" src="/assets/dist/index.bundle.5c5feaa8663412cf31c5.js"></script> </body> </html> See how the correct Css and JavaScript files have been placed into the HEAD/Script tags automatically, this is great I think, and they also have nice hashes as part of the file name, to allow the browser caches to be easily invalidated

Play "Home" route

So that's great but how does this page get served up. Well the trick to that lies in this Play framework route entry

# Home page GET / controllers.HomeController.index()

Which has this server side code to serve the route

package controllers import javax.inject.Inject import play.api.mvc.{Action, Controller} class HomeController @Inject() (environment: play.api.Environment) extends Controller { def index() = Action { val fullpath = s"${environment.rootPath}\\public\\dist\\index.html" val htmlContents = scala.io.Source.fromFile(fullpath).mkString Ok(htmlContents).as("text/html") } }

Routing

As I stated above this demo is a Singlle Page App (SPA) type of app. So how is that done. That is done in this file PlayBackEndApi/FrontEndWebSite/src/index.tsx, which is also set as an entry point in the WebPack webpack.config.js file.

let entries = { index: './src/index.tsx', ..... ..... ..... ..... }; //build it to the Play Framework public folder, which is services by the assets controller let buildDir = path.resolve(__dirname, '../public/dist'); module.exports = { context: __dirname, entry: entries, ..... ..... ..... ..... ..... }PlayBackEndApi/FrontEndWebSite/src/index.tsx

Where the actual routing work is done like this, where the main top level components will be

- Login

- Register

- Logout

- CreateJob

- ViewJob

- ViewRating

Some of which are conditional routes depending on whether you are logged in or not.

Copy Code

Copy Codeimport * as React from "react"; import * as ReactDOM from "react-dom"; import 'bootstrap/dist/css/bootstrap.css'; import { Nav, Navbar, NavItem, NavDropdown, MenuItem, Button } from "react-bootstrap"; import { Router, Route, hashHistory } from 'react-router' import { Login } from "./Login"; import { Logout } from "./Logout"; import { Register } from "./Register"; import { CreateJob } from "./CreateJob"; import { ViewJob } from "./ViewJob"; import { ViewRating } from "./ViewRating"; import { ContainerOperations } from "./ioc/ContainerOperations"; import { AuthService } from "./services/AuthService"; import { JobService } from "./services/JobService"; import { JobStreamService } from "./services/JobStreamService"; import { PositionService } from "./services/PositionService"; import { TYPES } from "./types"; import Rx from 'rx'; let authService = ContainerOperations.getInstance().container.get<AuthService>(TYPES.AuthService); let jobService = ContainerOperations.getInstance().container.get<JobService>(TYPES.JobService); let jobStreamService = ContainerOperations.getInstance().container.get<JobStreamService>(TYPES.JobStreamService); let positionService = ContainerOperations.getInstance().container.get<PositionService>(TYPES.PositionService); jobStreamService.init(); export interface MainNavProps { authService: AuthService; jobService: JobService; jobStreamService: JobStreamService; positionService: PositionService; } export interface MainNavState { isLoggedIn: boolean; } class MainNav extends React.Component<MainNavProps, MainNavState> { private _subscription: any; constructor(props: any) { super(props); console.log(props); this.state = { isLoggedIn: false }; } componentWillMount() { this._subscription = this.props.authService.getAuthenticationStream() .subscribe(isAuthenticated => { this.state = { isLoggedIn: isAuthenticated }; if (this.state.isLoggedIn) { hashHistory.push('/createjob'); } else { hashHistory.push('/'); } }); } componentWillUnmount() { this._subscription.dispose(); } render() { return ( this.state.isLoggedIn ? <Navbar collapseOnSelect> <Navbar.Header> <Navbar.Brand> <span>Simple Kafka-Uber</span> </Navbar.Brand> <Navbar.Toggle /> </Navbar.Header> <Navbar.Collapse> <Nav pullRight> <NavItem eventKey={2} href='#/logout'>Logout</NavItem> <NavItem eventKey={2} href='#/createjob'>Create Job</NavItem> <NavItem eventKey={2} href='#/viewjob'>View Job</NavItem> <NavItem eventKey={2} href='#/viewrating'>View Rating</NavItem> </Nav> </Navbar.Collapse> </Navbar> : <Navbar pullRight collapseOnSelect> <Navbar.Header> <Navbar.Brand> <span>Simple Kafka-Uber</span> </Navbar.Brand> <Navbar.Toggle /> </Navbar.Header> <Navbar.Collapse> </Navbar.Collapse> </Navbar> ) } } class App extends React.Component<undefined, undefined> { render() { return ( <div> <div> <MainNav authService={authService} jobService={jobService} jobStreamService={jobStreamService} positionService={positionService} /> {this.props.children} </div> </div> ) } } ReactDOM.render(( <Router history={hashHistory}> <Route component={App}> <Route path="/" component={Login} authService={authService} /> <Route path="/register" component={Register} authService={authService} /> <Route path="/logout" component={Logout} authService={authService} jobService={jobService} positionService={positionService} /> <Route path="/createjob" component={CreateJob} authService={authService} jobService={jobService} positionService={positionService} /> <Route path="/viewjob" component={ViewJob} authService={authService} jobService={jobService} jobStreamService={jobStreamService} positionService={positionService} /> <Route path="/viewrating" component={ViewRating} authService={authService} /> </Route> </Router> ), document.getElementById('root'));This makes use of ReactRouter version 3.0.5 to do the routing within the SPA, and also make use of React-Bootstrap for rendering the NavBar

This TypeScipt also hooks up a RxJS subcription to determine whether you are logged in/out, this is done using the following injectable service PlayBackEndApi/FrontEndWebSite/src/services/AuthService.ts

Copy Code

Copy Codeimport { injectable, inject } from "inversify"; import { TYPES } from "../types"; import Rx from 'rx'; @injectable() export class AuthService { private _isAuthenticated: boolean; private _authenticatedSubject = new Rx.Subject<boolean>(); constructor() { } clearUser = (): void => { this._isAuthenticated = false; sessionStorage.removeItem('currentUserProfile'); this._authenticatedSubject.onNext(false); } storeUser = (currentProfile: any): void => { if (currentProfile == null || currentProfile == undefined) return; this._isAuthenticated = true; sessionStorage.setItem('currentUserProfile', JSON.stringify(currentProfile)); this._authenticatedSubject.onNext(true); } userName = (): string => { var userProfile = JSON.parse(sessionStorage.getItem('currentUserProfile')); return userProfile.user.fullName; } user = (): any => { var userProfile = JSON.parse(sessionStorage.getItem('currentUserProfile')); return userProfile.user; } userEmail = (): string => { var userProfile = JSON.parse(sessionStorage.getItem('currentUserProfile')); return userProfile.user.email; } isDriver = (): boolean => { var userProfile = JSON.parse(sessionStorage.getItem('currentUserProfile')); return userProfile.isDriver; } isAuthenticated = (): boolean => { return this._isAuthenticated; } getAuthenticationStream = (): Rx.Observable<boolean> => { return this._authenticatedSubject.asObservable(); } }

Some common React / Bootstrap UI components

As with any UI work, you will inevitably end up where you need some core components that you re-use over and over again. For the demo app I had these 3 reusable React components that make use of React/React-Bootstrap

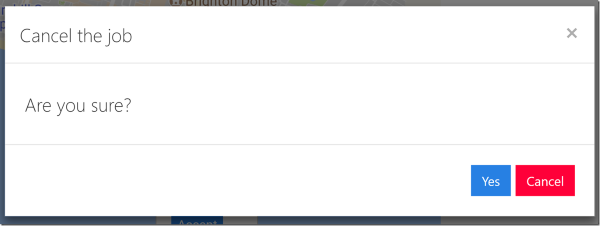

YesNoDialog

This represents a generic re-usable yes/no dialog that can be triggered, here is the code for this one. The important part is that the various prop values can be controlled via the parent component state values

Copy Code

Copy Codeimport * as React from "react"; import * as ReactDOM from "react-dom"; import * as _ from "lodash"; import 'bootstrap/dist/css/bootstrap.css'; import { Button, Modal } from "react-bootstrap"; //TODO : Fix this export interface YesNoDialogProps { headerText: string; theId: string; launchButtonText: string; yesCallBack: any; noCallBack: any; } export interface YesNoDialogState { showModal: boolean; } export class YesNoDialog extends React.Component<YesNoDialogProps, YesNoDialogState> { constructor(props) { super(props); console.log(this.props); //set initial state this.state = { showModal: false }; } _yesClicked = () => { this.setState({ showModal: false }); this.props.yesCallBack(); } _noClicked = () => { this.setState({ showModal: false }); this.props.noCallBack(); } _close = () => { this.setState({ showModal: false }); } _open = () => { this.setState({ showModal: true }); } render() { return ( <div className="leftFloat"> <Button id={this.props.theId} type='button' bsSize='small' bsStyle='primary' onClick={this._open}>{this.props.launchButtonText}</Button> <Modal show={this.state.showModal} onHide={this._close}> <Modal.Header closeButton> <Modal.Title>{ this.props.headerText }</Modal.Title> </Modal.Header> <Modal.Body> <h4>Are you sure?</h4> </Modal.Body> <Modal.Footer> <Button type='button' bsSize='small' bsStyle='primary' onClick={this._yesClicked}>Yes</Button> <Button type='button' bsSize='small' bsStyle='danger' onClick={this._noClicked}>Cancel</Button> </Modal.Footer> </Modal> </div> ); } }This looks like this when rendered

OkDialog

This represents a generic re-usable ok dialog that can be triggered, here is the code for this one. The important part is that the various prop values can be controlled via the parent component state values

Copy Code

Copy Codeimport * as React from "react"; import * as ReactDOM from "react-dom"; import * as _ from "lodash"; import 'bootstrap/dist/css/bootstrap.css'; import { Button, Modal } from "react-bootstrap"; //TODO : Fix this export interface OkDialogProps { headerText: string; bodyText: string; open: boolean; okCallBack: any; } export interface OkDialogState { showModal: boolean; } export class OkDialog extends React.Component<OkDialogProps, OkDialogState> { constructor(props) { super(props); console.log(this.props); //set initial state this.state = { showModal: false }; } componentDidMount() { if (this.props.open === true) { this.setState({ showModal: true }); } } _okClicked = () => { this.setState({ showModal: false }); this.props.okCallBack(); } _close = () => { this.setState({ showModal: false }); this.props.okCallBack(); } _open = () => { this.setState({ showModal: true }); } render() { return ( <div className="leftFloat"> <Modal show={this.state.showModal} onHide={this._close}> <Modal.Header closeButton> <Modal.Title>{ this.props.headerText }</Modal.Title> </Modal.Header> <Modal.Body> <h4>{this.props.bodyText}</h4> </Modal.Body> <Modal.Footer> <Button type='button' bsSize='small' bsStyle='primary' onClick={this._okClicked}>Ok</Button> </Modal.Footer> </Modal> </div> ); } }This looks like this when rendered

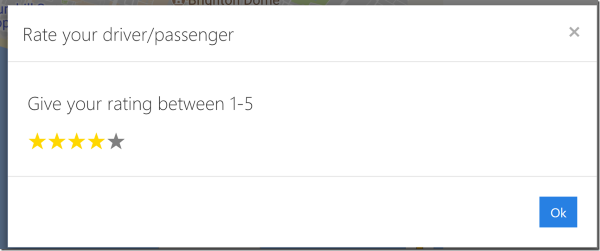

RatingDialog

This represents a generic re-usable rating control where rating can be from 1-5; here is the code for this one. The important part is that the various prop values can be controlled via the parent component state values

Copy Code

Copy Codeimport * as React from "react"; import * as ReactDOM from "react-dom"; import * as _ from "lodash"; import 'bootstrap/dist/css/bootstrap.css'; import { Button, Modal } from "react-bootstrap"; import ReactStars from 'react-stars'; export interface RatingDialogProps { headerText: string; theId: string; okCallBack: any; } export interface RatingDialogState { showModal: boolean; rating: number; } export class RatingDialog extends React.Component<RatingDialogProps, RatingDialogState> { constructor(props) { super(props); console.log(this.props); //set initial state this.state = { showModal: false, rating:0 }; } _close = () => { this.setState( { showModal: false, rating:0 } ); } _open = () => { this.setState( { showModal: true, rating: 0 } ); } _ratingChanged = (newRating) => { console.log(newRating) this.setState( { rating: newRating } ); } _okClicked = () => { this._close(); this.props.okCallBack(); } render() { return ( <div className="leftFloat"> <Button id={this.props.theId} type='button' bsSize='small' bsStyle='primary' onClick={this._open}>Complete</Button> <Modal show={this.state.showModal} onHide={this._close}> <Modal.Header closeButton> <Modal.Title>{ this.props.headerText }</Modal.Title> </Modal.Header> <Modal.Body> <h4>Give your rating between 1-5</h4> <ReactStars count={5} onChange={this._ratingChanged} size={24} color2={'#ffd700'} /> </Modal.Body> <Modal.Footer> <Button type='submit' bsSize='small' bsStyle='primary' onClick={this._okClicked}>Ok</Button> </Modal.Footer> </Modal> </div> );This looks like this when rendered

For the rating component I make use of this React library : https://www.npmjs.com/package/react-stars

Registration workflow

The registration workflow is pretty much as described above, and uses the pieces shown above.

There are 2 types of registration, where we need to capture different data

- Passenger registration

- Driver registration

We will examine the passenger registration in detail, with the driver registration being fairly similar in nature

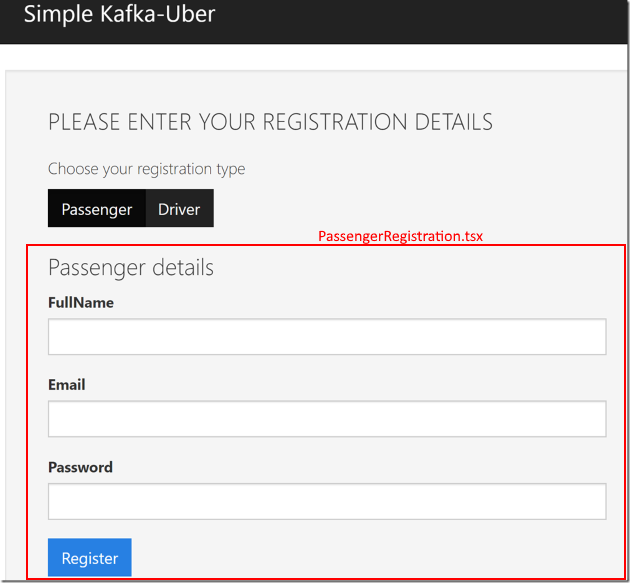

Passenger registration

Registration React component

PlayBackEndApi/FrontEndWebSite/src/PassengerRegistration.tsx is the main file for the React TSX representing the Passenger registration component.

Copy Code

Copy Codeimport * as React from "react"; import * as ReactDOM from "react-dom"; import { OkDialog } from "./components/OkDialog"; import 'bootstrap/dist/css/bootstrap.css'; import { Well, Grid, Row, Col, ButtonInput } from "react-bootstrap"; import { AuthService } from "./services/AuthService"; import { hashHistory } from 'react-router'; import { Form, ValidatedInput } from 'react-bootstrap-validation'; import revalidator from 'revalidator'; let schema = { properties: { fullName: { type: 'string', minLength: 8, maxLength: 60, required: true, allowEmpty: false }, email: { type: 'string', maxLength: 255, format: 'email', required: true, allowEmpty: false }, password: { type: 'string', minLength: 8, maxLength: 60, required: true, allowEmpty: false } } }; export interface PassengerRegistrationProps { authService: AuthService; } export interface PassengerRegistrationState { okDialogOpen: boolean; okDialogKey: number; okDialogHeaderText: string; okDialogBodyText: string; wasSuccessful: boolean; } export class PassengerRegistration extends React.Component<PassengerRegistrationProps, PassengerRegistrationState> { constructor(props: any) { super(props); this.state = { okDialogHeaderText: '', okDialogBodyText: '', okDialogOpen: false, okDialogKey: 0, wasSuccessful: false }; } render() { return ( <Form className="submittable-form-inner" // Supply callbacks to both valid and invalid // submit attempts validateAll={this.validateForm} onInvalidSubmit={this.handleInvalidSubmit} onValidSubmit={this.handleValidSubmit}> <Grid> <Row className="show-grid"> <Col xs={10} md={6}> <h4>Passenger details</h4> </Col> </Row> <Row className="show-grid"> <Col xs={10} md={6}> <ValidatedInput type='text' label='FullName' name='fullName' errorHelp='FullName is invalid' /> </Col> </Row> <Row className="show-grid"> <Col xs={10} md={6}> <ValidatedInput type='text' label='Email' name='email' errorHelp='Email address is invalid' /> </Col> </Row> <Row className="show-grid"> <Col xs={10} md={6}> <ValidatedInput type='password' label='Password' name='password' errorHelp='Password is invalid' /> </Col> </Row> <Row className="show-grid"> <Col xs={10} md={6}> <ButtonInput id="registerBtn" type='submit' bsSize='small' bsStyle='primary' value='Register'>Register</ButtonInput> </Col> </Row> <Row className="show-grid"> <span> <OkDialog open={this.state.okDialogOpen} okCallBack={this.okDialogCallBack} headerText={this.state.okDialogHeaderText} bodyText={this.state.okDialogBodyText} key={this.state.okDialogKey} /> </span> </Row> </Grid> </Form> ) } validateForm = (values) => { let res = revalidator.validate(values, schema); // If the values passed validation, we return true if (res.valid) { return true; } // Otherwise we should return an object containing errors // e.g. { email: true, password: true } return res.errors.reduce((errors, error) => { // Set each property to either true or // a string error description errors[error.property] = true; return errors; }, {}); } handleInvalidSubmit = (errors, values) => { // Errors is an array containing input names // that failed to validate this.setState( { okDialogHeaderText: 'Validation Error', okDialogBodyText: 'Form has errors and may not be submitted', okDialogOpen: true, okDialogKey: Math.random() }); } handleValidSubmit = (values) => { var passenger = values; var self = this; $.ajax({ type: 'POST', url: 'registration/save/passenger', data: JSON.stringify(passenger), contentType: "application/json; charset=utf-8", dataType: 'json' }) .done(function (jdata, textStatus, jqXHR) { var redactedPassenger = passenger; redactedPassenger.password = ""; console.log("redacted ${redactedPassenger}"); console.log(redactedPassenger); console.log("Auth Service"); console.log(self.props.authService); let userProfile = { isDriver: false, user: redactedPassenger }; self.setState( { wasSuccessful: true, okDialogHeaderText: 'Registration Successful', okDialogBodyText: 'You are now registered', okDialogOpen: true, okDialogKey: Math.random() }); self.props.authService.storeUser(userProfile); }) .fail(function (jqXHR, textStatus, errorThrown) { self.setState( { okDialogHeaderText: 'Error', okDialogBodyText: jqXHR.responseText, okDialogOpen: true, okDialogKey: Math.random() }); }); } okDialogCallBack = () => { this.setState( { okDialogOpen: false }); if (this.state.wasSuccessful) { hashHistory.push('/'); } } }There are a couple of things of note there

- That we use a standard POST

- That will post the registration data as JSON to the Play API backend code

- That we also show a standard Bootstrap OkDialog which we looked at last time, which when the Ok button is clicked will use the React Router to navigate to the route page

- That we use the react-bootstrap-validation package for field validation

- That if the registration process is ok we store the user data in the

AuthorisationService.tsthat we saw earlier, where it in turn writes to the browsersessionStorage

Lets now turn our attention to the Play API backend code that goes with the Passenger Registration

Json Support

We need to start with the JSON suport from JavaScript to Scala. This is done using this file PlayBackEndApi/app/Entities/PassengerRegistrationEntities.scala, where we use Play framework JSON combinators

- Reads : Which allows reading a JSON string into a Scala object

- Writes : Which allows a Scala object to be turned into a JSON string

- Format : is just a mix of the Reads and Writes Traits and can be used for implicit conversion in place of its components.

The recommendation for both of these is that they are exposed as implicit vals you can read more about it here : https://www.playframework.com/documentation/2.6.x/ScalaJsonCombinators

Copy Code

Copy Codepackage entities import play.api.libs.json._ import play.api.libs.functional.syntax._ case class PassengerRegistration( fullName: String, email: String, password: String) object PassengerRegistration { implicit val formatter = Json.format[PassengerRegistration] } object PassengerRegistrationJsonFormatters { implicit val passengerRegistrationWrites = new Writes[PassengerRegistration] { def writes(passengerRegistration: PassengerRegistration) = Json.obj( "fullName" -> passengerRegistration.fullName, "email" -> passengerRegistration.email, "password" -> passengerRegistration.password ) } implicit val passengerRegistrationReads: Reads[PassengerRegistration] = ( (JsPath \ "fullName").read[String] and (JsPath \ "email").read[String] and (JsPath \ "password").read[String] )(PassengerRegistration.apply _) }Registration route

Once we have that in place we need to turn our attention to the actual endpoint to support the POST of a PassengerRegistration object. We first need to set up the route in the conf/routes file as follows:

# Registration page POST /registration/save/passenger controllers.RegistrationController.savePassengerRegistration()

Registration controller

So now that we have talked about the JSON Reads/Writes and we know that we need Mongo downloaded and running (see how do I run this section at start), lets see what the actual controller looks like shall we. Here is the FULL code for the Passenger Registration portion of the PlayBackEndApi/app/controllers/RegistrationController.scala

Copy Code

Copy Codepackage controllers import javax.inject.Inject import play.api.mvc.{Action, Controller, Result} import entities._ import entities.DriverRegistrationJsonFormatters._ import entities.PassengerRegistrationJsonFormatters._ import scala.concurrent.{ExecutionContext, Future} import play.modules.reactivemongo._ import play.api.Logger import utils.Errors import play.api.libs.json._ import reactivemongo.api.ReadPreference import reactivemongo.play.json._ import collection._ class RegistrationController @Inject() (val reactiveMongoApi: ReactiveMongoApi) (implicit ec: ExecutionContext) extends Controller with MongoController with ReactiveMongoComponents { def passRegistrationFuture: Future[JSONCollection] = database.map(_.collection[JSONCollection]("passenger-registrations")) def driverRegistrationFuture: Future[JSONCollection] = database.map(_.collection[JSONCollection]("driver-registrations")) def savePassengerRegistration = Action.async(parse.json) { request => Json.fromJson[PassengerRegistration](request.body) match { case JsSuccess(newPassRegistration, _) => //https://github.com/ReactiveMongo/ReactiveMongo-Extensions/blob/0.10.x/guide/dsl.md val query = Json.obj("email" -> Json.obj("$eq" -> newPassRegistration.email)) dealWithRegistration[PassengerRegistration]( newPassRegistration, passRegistrationFuture, query, PassengerRegistration.formatter) case JsError(errors) => Future.successful(BadRequest("Could not build a PassengerRegistration from the json provided. " + Errors.show(errors))) } } private def dealWithRegistration[T]( incomingRegistration: T, jsonCollectionFuture: Future[JSONCollection], query: JsObject, formatter: OFormat[T]) (implicit ec: ExecutionContext): Future[Result] = { def hasExistingRegistrationFuture = jsonCollectionFuture.flatMap { //http://reactivemongo.org/releases/0.11/documentation/advanced-topics/collection-api.html _.find(query) .cursor[JsObject](ReadPreference.primary) .collect[List]() }.map(_.length match { case 0 => false case _ => true } ) hasExistingRegistrationFuture.flatMap { case false => { for { registrations <- jsonCollectionFuture writeResult <- registrations.insert(incomingRegistration)(formatter,ec) } yield { Logger.debug(s"Successfully inserted with LastError: $writeResult") Ok(Json.obj()) } } case true => Future(BadRequest("Registration already exists")) } } }Lets break this down into chunks

- The controller constructor

- This takes a

ReactiveMongoApi(this is mandatory to satisfy the base traitMongoControllerrequirements) - Inherits from

MongoControllerwhich provides a lot of use functionality - It also inherits from

ReactiveMongoComponentsin order to allow the cake pattern/self typing requirements of the baseMongoControllerwhich expects aReactiveMongoComponents

- This takes a

- The use of

JSONCollection- There is a

Future[JSONCollection]that represents the passenger collection in Mongo. This is a collection that stores JSON. When using reactive Mongo you have a choice about whether to use the standard BSON collections of JSON. I opted for JSON

- There is a

- The Guts Of The Logic

- So now we have discussed the controller constructor and the Mongo collections. We just need to talk about the actual work that happens on registration. In a nutshell it works like this

- The incoming JSON string is turned into a

PassengerRegistrationobject via Play - We then create a new JSON query object to query the Mongo

JSONCollectionto see if a registration already exists - If a registration already exists we exit with a

BadRequestoutput - If a registration does NOT already exist we insert the new registration details into the Mongo

JSONCollection, and the we return anOkoutput

- The incoming JSON string is turned into a

- So now we have discussed the controller constructor and the Mongo collections. We just need to talk about the actual work that happens on registration. In a nutshell it works like this

And that is how the Passenger Registration works.

Driver registration

The driver registration works in much the same way as described above, its just slightly different JSON, but it does share the same core logic/controller as the Passenger Registration

Login workflow

The login uses the pieces shown above.

This workflow is VERY similar to registration, so feel free to skip this section if you think you understand how registration concepts work

Login React component

This is the main PlayBackEndApi/FrontEndWebSite/src/Login.tsx file for the React TSX representing the login component.

Copy Code

Copy Codeimport * as React from "react"; import * as ReactDOM from "react-dom"; import { OkDialog } from "./components/OkDialog"; import 'bootstrap/dist/css/bootstrap.css'; import { Well, Grid, Row, Col, ButtonInput } from "react-bootstrap"; import { Form, ValidatedInput } from 'react-bootstrap-validation'; import revalidator from 'revalidator'; import { AuthService } from "./services/AuthService"; let schema = { properties: { email: { type: 'string', maxLength: 255, format: 'email', required: true, allowEmpty: false }, password: { type: 'string', minLength: 8, maxLength: 60, required: true, allowEmpty: false } } }; export interface LoginState { okDialogOpen: boolean; okDialogKey: number; okDialogHeaderText: string; okDialogBodyText: string; } export class Login extends React.Component<undefined, LoginState> { private _authService: AuthService; constructor(props: any) { super(props); console.log(props); this._authService = props.route.authService; this.state = { okDialogHeaderText: '', okDialogBodyText: '', okDialogOpen: false, okDialogKey: 0 }; } render() { return ( <Well className="outer-well"> <Form // Supply callbacks to both valid and invalid // submit attempts validateAll={this.validateForm} onInvalidSubmit={this.handleInvalidSubmit} onValidSubmit={this.handleValidSubmit}> <Grid> <Row className="show-grid"> <Col xs={10} md={6}> <h4>ENTER YOUR LOGIN DETAILS</h4> <span><h6>Or click <a href="#/register">here</a> to register</h6></span> </Col> </Row> <Row className="show-grid"> <Col xs={10} md={6}> <ValidatedInput type='text' label='Email' name='email' errorHelp='Email address is invalid' /> </Col> </Row> <Row className="show-grid"> <Col xs={10} md={6}> <ValidatedInput type='password' name='password' label='Password' errorHelp='Password is invalid' /> </Col> </Row> <Row className="show-grid"> <Col xs={10} md={6}> <ValidatedInput type='checkbox' name='isDriver' label='Are you a driver?' /> </Col> </Row> <Row className="show-grid"> <Col xs={10} md={6}> <ButtonInput id="loginBtn" type='submit' bsSize='small' bsStyle='primary' value='Register'>Login</ButtonInput> </Col> </Row> <Row className="show-grid"> <span> <OkDialog open={this.state.okDialogOpen} okCallBack={this.okDialogCallBack} headerText={this.state.okDialogHeaderText} bodyText={this.state.okDialogBodyText} key={this.state.okDialogKey} /> </span> </Row> </Grid> </Form> </Well> ) } validateForm = (values) => { let res = revalidator.validate(values, schema); // If the values passed validation, we return true if (res.valid) { return true; } // Otherwise we should return an object containing errors // e.g. { email: true, password: true } return res.errors.reduce((errors, error) => { // Set each property to either true or // a string error description errors[error.property] = true; return errors; }, {}); } handleInvalidSubmit = (errors, values) => { console.log(values); // Errors is an array containing input names // that failed to validate this.setState( { okDialogHeaderText: 'Validation Error', okDialogBodyText: 'Form has errors and may not be submitted', okDialogOpen: true, okDialogKey: Math.random() }); } handleValidSubmit = (values) => { var logindetails = values; var self = this; $.ajax({ type: 'POST', url: 'login/validate', data: JSON.stringify(logindetails), contentType: "application/json; charset=utf-8", dataType: 'json' }) .done(function (jdata, textStatus, jqXHR) { console.log("result of login"); console.log(jqXHR.responseText); let currentUser = JSON.parse(jqXHR.responseText); let userProfile = { isDriver: logindetails.isDriver, user: currentUser }; self._authService.storeUser(userProfile); self.setState( { okDialogHeaderText: 'Login Successful', okDialogBodyText: 'You are now logged in', okDialogOpen: true, okDialogKey: Math.random() }); }) .fail(function (jqXHR, textStatus, errorThrown) { self.setState( { okDialogHeaderText: 'Error', okDialogBodyText: jqXHR.responseText, okDialogOpen: true, okDialogKey: Math.random() }); }); } okDialogCallBack = () => { this.setState( { okDialogOpen: false }); } }There are a couple of things of note there

- That we use a standard POST

- That will post the login data as JSON to the Play API backend code

- That we use the react-bootstrap-validation package for field validation

- That if the login process is okay we store the user data in the

AuthorisationService.tsthat we saw earlier, where it in turn writes to the browsersessionStorage

Lets now turn our attention to the Play API backend code that goes with the Login

Json Support

We need to start with the JSON support from JavaScript to Scala. This is done using this file PlayBackEndApi/app/Entities/LoginEntities.scala, where we use Play framework JSON combinators

- Reads : Which allows reading a JSON string into a Scala object

- Writes : Which allows a Scala object to be turned into a JSON string

- Format : is just a mix of the Reads and Writes Traits and can be used for implicit conversion in place of its components.

The recommendation for both of these is that they are exposed as implicit vals you can read more about it here :https://www.playframework.com/documentation/2.6.x/ScalaJsonCombinators

package entities import play.api.libs.json._ import play.api.libs.functional.syntax._ case class Login(email: String, password: String, isDriver: Boolean) object Login { implicit val formatter = Json.format[Login] } object LoginJsonFormatters { implicit val loginWrites = new Writes[Login] { def writes(login: Login) = Json.obj( "email" -> login.email, "password" -> login.password, "isDriver" -> login.isDriver ) } implicit val loginReads: Reads[Login] = ( (JsPath \ "email").read[String] and (JsPath \ "password").read[String] and ((JsPath \ "isDriver").read[Boolean]) )(Login.apply _) }Login route

Once we have that in place we need to turn our attention to the actual endpoint to support the POST of a Login object. We first need to set up the route in the conf/routes file as follows:

# Login page POST /login/validate controllers.LoginController.validateLogin()

Login controller

So now that we have talked about the JSON Reads/Writes and we know that we need Mongo downloaded and running (see how do I run this section at start), lets see what the actual controller looks like shall we. Here is the FULL code for the PlayBackEndApi/app/controllers/LoginController.scala

Copy Code

Copy Codepackage controllers import javax.inject.Inject import entities.DriverRegistrationJsonFormatters._ import entities.PassengerRegistrationJsonFormatters._ import entities._ import play.api.Logger import play.api.libs.json._ import play.api.mvc.{Action, Controller, Result} import play.modules.reactivemongo._ import reactivemongo.api.ReadPreference import reactivemongo.play.json._ import reactivemongo.play.json.collection._ import utils.Errors import scala.concurrent.{ExecutionContext, Future} class LoginController @Inject() (val reactiveMongoApi: ReactiveMongoApi) (implicit ec: ExecutionContext) extends Controller with MongoController with ReactiveMongoComponents { def passRegistrationFuture: Future[JSONCollection] = database.map(_.collection[JSONCollection]("passenger-registrations")) def driverRegistrationFuture: Future[JSONCollection] = database.map(_.collection[JSONCollection]("driver-registrations")) def validateLogin = Action.async(parse.json) { request => Json.fromJson[Login](request.body) match { case JsSuccess(newLoginDetails, _) => newLoginDetails.isDriver match { case false => { val maybePassengerReg = extractExistingRegistration( passRegistrationFuture.flatMap { _.find(Json.obj("email" -> Json.obj("$eq" -> newLoginDetails.email))). cursor[JsObject](ReadPreference.primary). collect[List]() }) returnRedactedRegistration[PassengerRegistration]( maybePassengerReg, (reg: PassengerRegistration) => Ok(Json.toJson(reg.copy(password = ""))) ) } case true => { val maybeDriverReg = extractExistingRegistration( driverRegistrationFuture.flatMap { _.find(Json.obj("email" -> Json.obj("$eq" -> newLoginDetails.email))). cursor[JsObject](ReadPreference.primary). collect[List]() }) returnRedactedRegistration[DriverRegistration]( maybeDriverReg, (reg: DriverRegistration) => Ok(Json.toJson(reg.copy(password = ""))) ) } } case JsError(errors) => Future.successful(BadRequest("Could not build a Login from the json provided. " + Errors.show(errors))) } } private def returnRedactedRegistration[T] ( maybeDriverRegFuture: Future[Option[JsObject]], redactor : T => Result )(implicit reads: Reads[T]): Future[Result] = { maybeDriverRegFuture.map { case Some(json) => { val reg = Json.fromJson[T](json) reg match { case JsSuccess(reg, _) => { redactor(reg) } case _ => BadRequest("Login already exists") } } case None => BadRequest("Could not find login") } } private def extractExistingRegistration[T] (incomingRegistrations: Future[List[T]]) (implicit writes: Writes[T], ec: ExecutionContext): Future[Option[T]] = { incomingRegistrations.map(matchedRegistrations => matchedRegistrations.length match { case 0 => None case _ => Some(matchedRegistrations(0)) } ) } }This is similar enough in nature to the registration process, so I will not go into this any further.

CreateJob workflow

The CreateJob uses the pieces shown above, and is intended to work like this

CreatJob React component

This is the code for the CreateJob React component

Copy Code